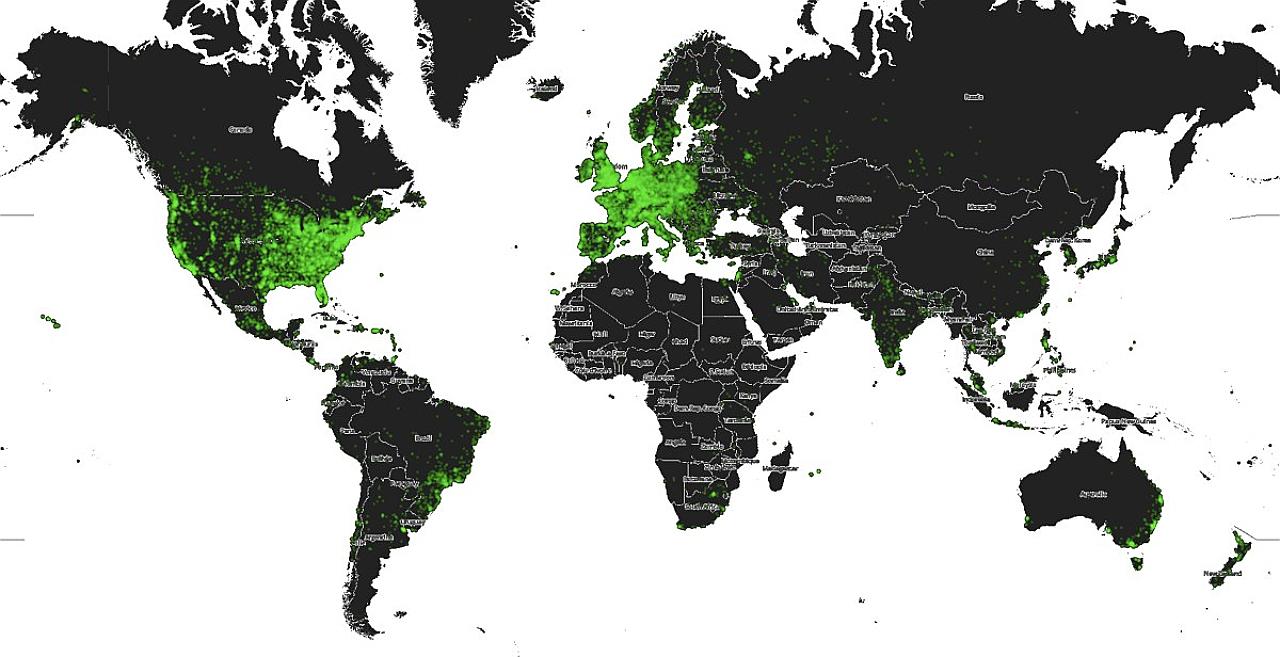

Advances in technology have led to a massive increase in the volume of scientific data collected, all of which requires processing and analysis. Virtual citizen science (VCS) platforms allow the online community both to access these data and to carry out analysis that assists the scientific teams involved (Reed et al., 2012). Launched in 2007, the Zooniverse is a suite of VCS platforms covering a range of disciplines, and it now has over a million registered users worldwide.

Location of Zooniverse Volunteers (taken from blog.zooniverse.org)

The Zooniverse platforms differ not only in the discipline involved but also in a number of other factors. These include the types of psychophysical tasks and judgements required (Pelli & Farell, 2010) and their complexity, as well as how much freedom users have in choosing the order in which they attempt tasks. Website take-up also varies, specifically in visits per day, time spent analysing data and visitor loyalty.

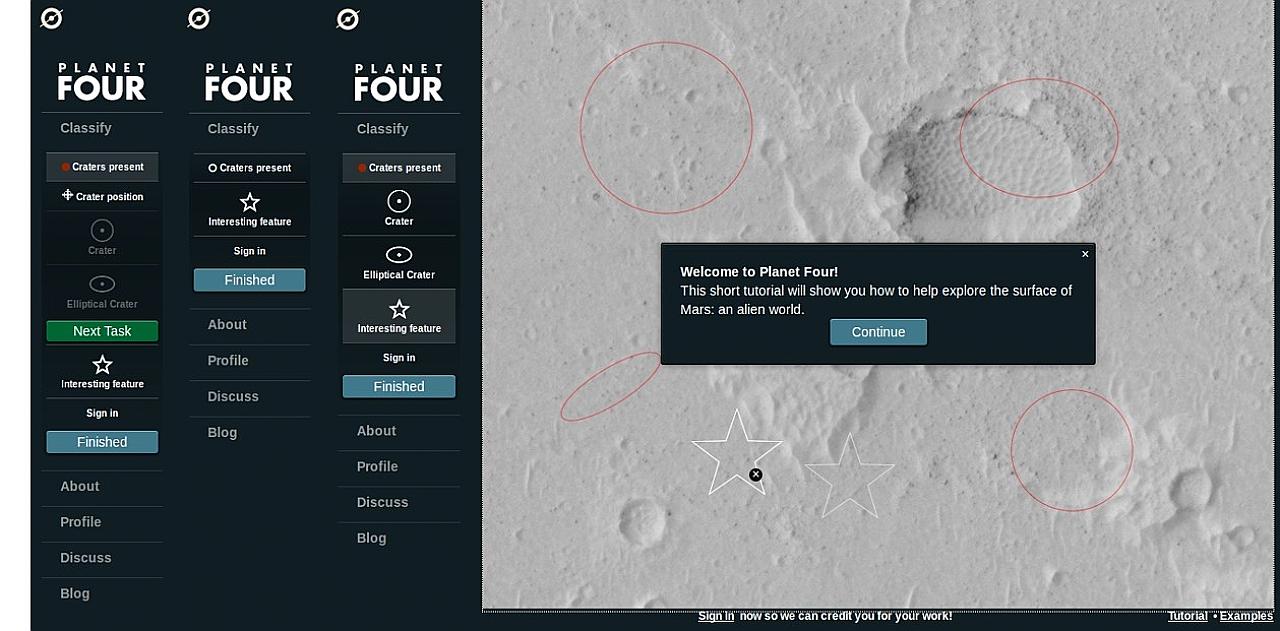

To investigate whether there is a link between task design and user engagement with a website, a new Zooniverse site has been developed based on Planet Four, the existing platform that studies the Martian surface. A new science case has also been derived, requiring users to mark craters found in imagery of the Cerberus Fossae region of the planet's surface. NASA's InSight mission, due to arrive in 2016, will carry instrumentation to study the planet's seismology. Crater counting can help derive the age of the surface and hence the level of seismic activity expected, allowing instrument design, sampling strategy and data interpretation to be optimised (Taylor et al., 2013).
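Crater-count dating usually starts from a cumulative size-frequency count: the number of craters per unit area whose diameter is at or above a given size. The sketch below shows that step only, computing cumulative densities from a list of marked crater diameters. The function name `cumulative_density`, the sample diameters and the 100 km² area are hypothetical; turning these densities into an absolute surface age would additionally require a published Martian chronology function, which is not shown here.

```python
from bisect import bisect_left


def cumulative_density(diameters_km, area_km2, thresholds_km):
    """Return N(>=D) per km^2 for each threshold diameter D.

    diameters_km: crater diameters marked by users (hypothetical input)
    area_km2: surface area covered by the counted images
    thresholds_km: diameters at which to evaluate the cumulative count
    """
    if area_km2 <= 0:
        raise ValueError("area must be positive")
    ordered = sorted(diameters_km)
    densities = []
    for d in thresholds_km:
        # Craters with diameter >= d are everything from the first index
        # at which d could be inserted while keeping the list sorted.
        count = len(ordered) - bisect_left(ordered, d)
        densities.append(count / area_km2)
    return densities


if __name__ == "__main__":
    # Hypothetical diameters (km) over a hypothetical 100 km^2 area.
    marked = [0.1, 0.15, 0.2, 0.2, 0.35, 0.5, 1.2]
    thresholds = [0.1, 0.2, 0.5, 1.0]
    for d, n in zip(thresholds, cumulative_density(marked, 100.0, thresholds)):
        print(f"N(>={d} km) = {n:.3f} per km^2")
```

Using a sorted list with `bisect_left` keeps each threshold lookup logarithmic, which matters little for one image but helps when pooling markings from many volunteers.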

To allow users to carry out the crater-counting science case, three different interfaces have been developed, differing in task type, complexity and user freedom:

Stepped, ramped & full interfaces of crater counting Planet Four (left to right)

Thirty participants trialled the new platform, marking craters with all three interfaces on 78 selected images of the Cerberus Fossae region. Initial analysis of the results suggests that changes in interface design can alter user behaviour in terms of the number of markings made, the size of those markings and the time users spend analysing each image.
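The three behavioural measures named above (markings per image, marking size and time per image) can be tabulated per interface with a few lines of code. This is a minimal sketch, assuming a hypothetical `ImageSession` record holding one participant's markings and viewing time for one image; the field names and sample values are illustrative only and do not reproduce the trial's data.

```python
from collections import defaultdict
from dataclasses import dataclass
from statistics import mean
from typing import List


@dataclass
class ImageSession:
    """One participant's work on one image (hypothetical record layout)."""
    interface: str             # "stepped", "ramped" or "full"
    image_id: int
    marking_radii: List[float]  # radius of each crater marking, in pixels
    seconds_spent: float        # time spent analysing the image


def summarise(sessions):
    """Per interface: mean markings per image, mean marking radius, mean time per image."""
    grouped = defaultdict(list)
    for s in sessions:
        grouped[s.interface].append(s)
    summary = {}
    for interface, group in grouped.items():
        # Pool every marking made with this interface to get the mean size.
        radii = [r for s in group for r in s.marking_radii]
        summary[interface] = {
            "markings_per_image": mean(len(s.marking_radii) for s in group),
            "mean_radius_px": mean(radii) if radii else 0.0,
            "seconds_per_image": mean(s.seconds_spent for s in group),
        }
    return summary


if __name__ == "__main__":
    # Hypothetical sessions, for illustration only.
    demo = [
        ImageSession("stepped", 1, [10.0, 20.0], 30.0),
        ImageSession("stepped", 2, [30.0], 50.0),
        ImageSession("full", 1, [], 12.0),
    ]
    for name, stats in summarise(demo).items():
        print(name, stats)
```

Grouping by interface first keeps the comparison within-interface; a real analysis would also need to account for each participant using all three interfaces.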

Future investigations will examine the accuracy and variance of participants' results compared with data from expert planetary scientists, as well as users' feedback on the interfaces and the difficulty of the tasks involved.

This author is supported by the Horizon Centre for Doctoral Training at the University of Nottingham (RCUK Grant No. EP/G037574/1) and by the RCUK's Horizon Digital Economy Research Institute (RCUK Grant No. EP/G065802/1).